Gemini Contest Submission

AI Interviewer App

Introducing our AI interviewer app, designed to help interviewees with their interview preparation.

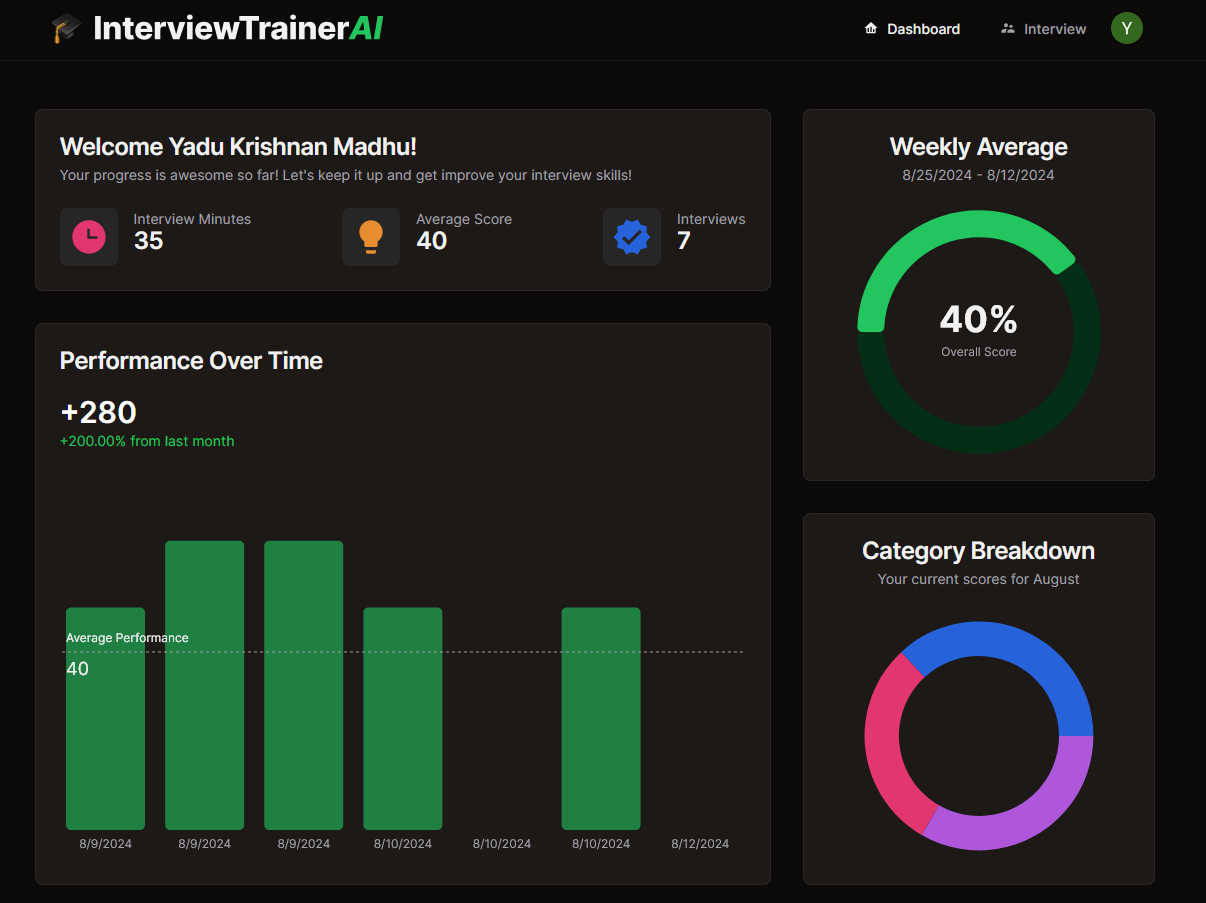

The dashboard shows all the available metrics about the interviews users have completed.

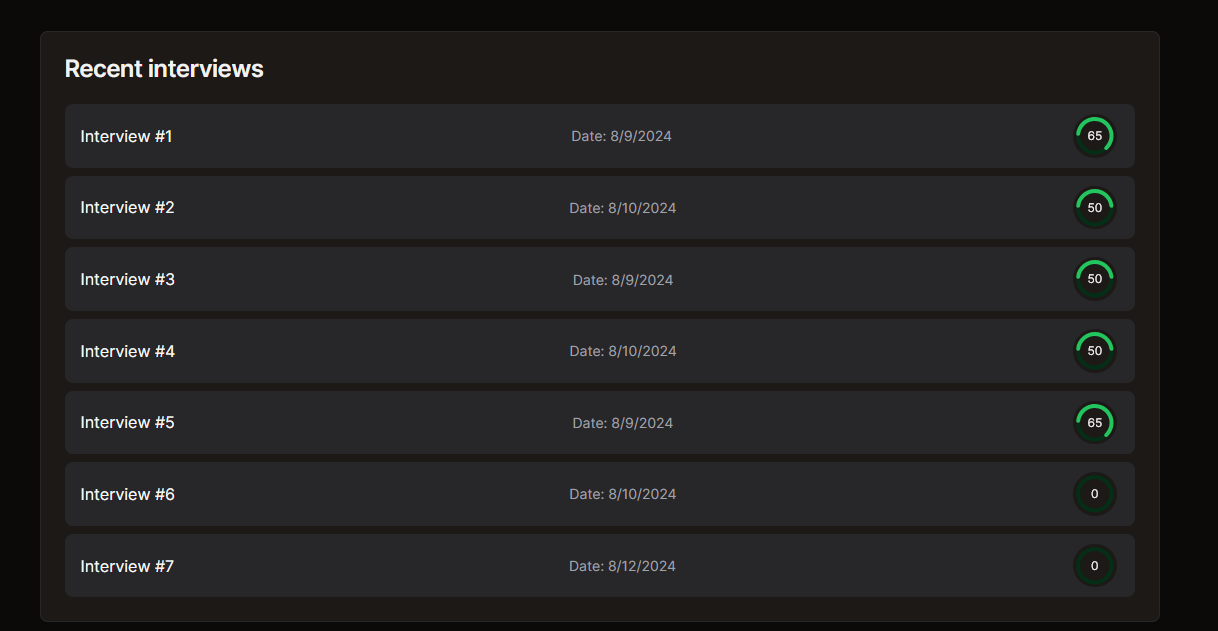

Scrolling down, users can see a history of the interviews they have completed.

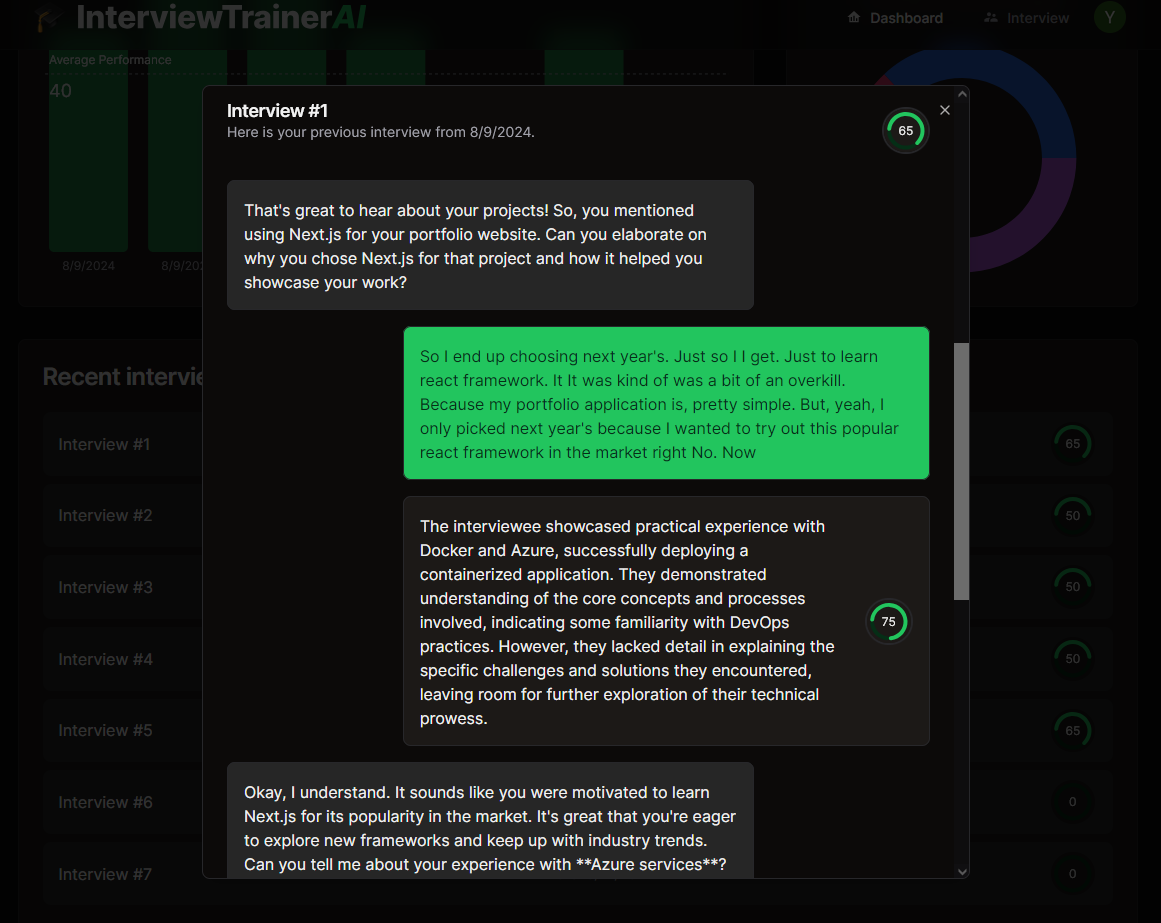

Users can view a detailed summary of their responses by selecting one of them.

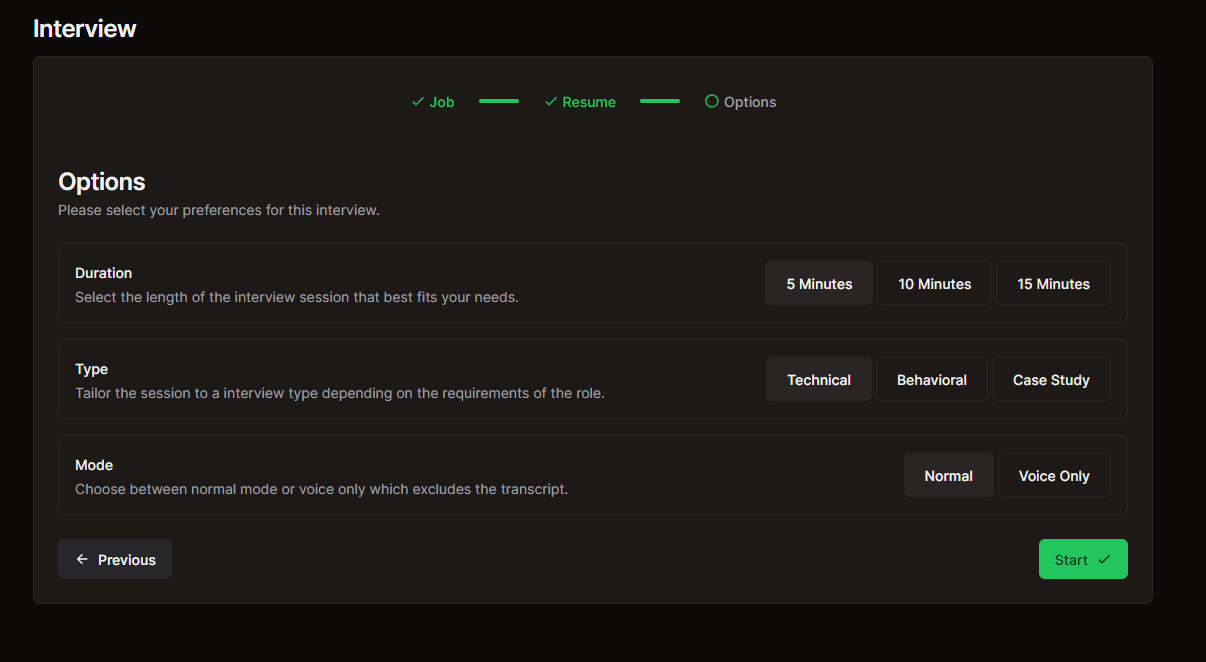

These are the currently available interview settings, which let users tailor the experience to their needs.

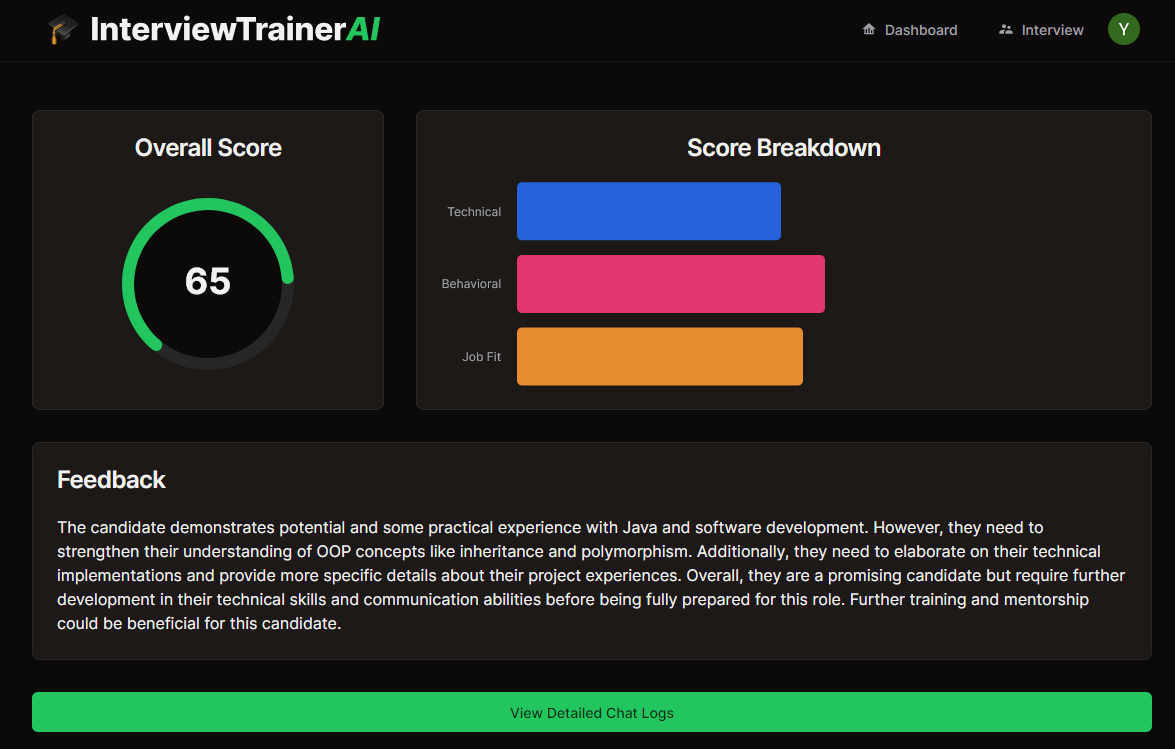

This is the results page shown after the interview finishes.

Implementation details

I worked on a number of things in this project. One of the more notable is the Chat component. Here is all the state held by this single, but core, component:

```typescript
// Conversation, recording, and UI state
const [chat, setChat] = useState<ChatMessage[]>([]);
const [transcript, setTranscript] = useState<string>("");
const [isRecording, setIsRecording] = useState<boolean>(false);
const [amplitude, setAmplitude] = useState<number>(2.3);
const [isDialogOpen, setIsDialogOpen] = useState<boolean>(false);
const [resume, setResume] = useState<Resume | undefined>();
const [isLoading, setIsLoading] = useState<boolean>(true);
const [isSocketSetupLoading, setIsSocketSetupLoading] =
  useState<boolean>(true);
const [confirmedNavigation, setConfirmedNavigation] =
  useState<boolean>(false);
const [hasInterviewStarted, setHasInterviewStarted] =
  useState<boolean>(false);
const [seconds, setSeconds] = useState(0);
const [interviewEnded, setInterviewEnded] = useState<boolean>(false);
const [isDoneDialogOpen, setIsDoneDialogOpen] = useState<boolean>(false);
const [geminiAnalyser, setGeminiAnalyser] = useState<AnalyserNode>();
const [geminiAudioContext, setGeminiAudioContext] = useState<AudioContext>();
const [resultsLoading, setResultsLoading] = useState<boolean>(true);

// Mutable handles that must survive re-renders without triggering them
const socketRef = useRef<WebSocket | null>(null);
const mediaRecorderRef = useRef<MediaRecorder | null>(null);
const socketIntervalRef = useRef<NodeJS.Timeout | null>(null);
const analyserRef = useRef<AnalyserNode | null>(null);
const isRecordingRef = useRef<boolean>(isRecording);
const audioContextRef = useRef<AudioContext | undefined>();
const micAudioContextRef = useRef<AudioContext | undefined>();
const streamRef = useRef<MediaStream | undefined>();
const sourceRef = useRef<MediaStreamAudioSourceNode | undefined>();
const geminiRef = useRef<InterviewBot>(new InterviewBot());
const locationStateRef = useRef<InterviewProps | undefined>();
const interviewResultRef = useRef<Interview>();

// Context and routing hooks
const { db } = useFirebaseContext();
const { user } = useAuthContext();
const location = useLocation();
const navigate = useNavigate();
```

This is definitely excessive. I plan to refactor it and pull components out of the file so that it only describes the chat logic, but that's for another day.
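As a rough sketch of one direction such a refactor could take (an assumption, not the project's actual plan), the interview lifecycle flags `hasInterviewStarted`, `interviewEnded`, `isRecording`, and `seconds` could be folded into a single reducer. The names `InterviewState`, `InterviewAction`, `initialInterviewState`, and `interviewReducer` are hypothetical and chosen only for illustration.

```typescript
// Hypothetical sketch: types and names are illustrative, not from the project.
interface InterviewState {
  hasInterviewStarted: boolean;
  interviewEnded: boolean;
  isRecording: boolean;
  seconds: number;
}

type InterviewAction =
  | { type: "start" }
  | { type: "tick" }
  | { type: "setRecording"; value: boolean }
  | { type: "end" };

const initialInterviewState: InterviewState = {
  hasInterviewStarted: false,
  interviewEnded: false,
  isRecording: false,
  seconds: 0,
};

function interviewReducer(
  state: InterviewState,
  action: InterviewAction
): InterviewState {
  // True only between "start" and "end".
  const running = state.hasInterviewStarted && !state.interviewEnded;
  switch (action.type) {
    case "start":
      return { ...state, hasInterviewStarted: true, interviewEnded: false, seconds: 0 };
    case "tick":
      // Only count elapsed time while the interview is running.
      return running ? { ...state, seconds: state.seconds + 1 } : state;
    case "setRecording":
      // Ignore recording toggles outside a running interview.
      return running ? { ...state, isRecording: action.value } : state;
    case "end":
      // Ending the interview also stops any active recording.
      return { ...state, interviewEnded: true, isRecording: false };
  }
}
```

Inside the component, this would replace four `useState` calls with a single `useReducer(interviewReducer, initialInterviewState)`, and the transitions become testable without rendering anything.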

While working on the TTS functionality, I assumed that Firebase Cloud Function endpoints could only send simple HTTP responses. However, I learned from this link that I can pipe a stream into the response to send audio blobs for TTS.

```typescript
const responseBody = await getTTS(text);
res.setHeader('Content-Type', 'audio/mp3');
responseBody.pipe(res);
```

where `getTTS` returns a `Readable`.
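The same idea can be tried outside Firebase with nothing but Node's built-in `stream` module, since an HTTP response object is a `Writable`. The following is a minimal sketch under that assumption: `fakeTTS` is a hypothetical stand-in for `getTTS`, and `streamAudio` and `AudioResponse` are illustrative names, not the project's code.

```typescript
import { Readable, Writable } from "node:stream";
import { pipeline } from "node:stream/promises";

// Hypothetical stand-in for getTTS: emits the "audio" as two chunks.
function fakeTTS(text: string): Readable {
  return Readable.from([Buffer.from(text), Buffer.from("!")]);
}

// Minimal response shape for this sketch: a Writable that may accept headers.
type AudioResponse = Writable & {
  setHeader?: (name: string, value: string) => void;
};

async function streamAudio(source: Readable, res: AudioResponse): Promise<void> {
  res.setHeader?.("Content-Type", "audio/mp3");
  // pipeline forwards chunks as they arrive, ends `res` when the source ends,
  // and rejects if either stream errors.
  await pipeline(source, res);
}
```

Compared with a bare `.pipe()`, `pipeline` rejects its promise when either stream fails, so the handler has one place to log the error or close the response.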